How do infants represent and understand the visual world?

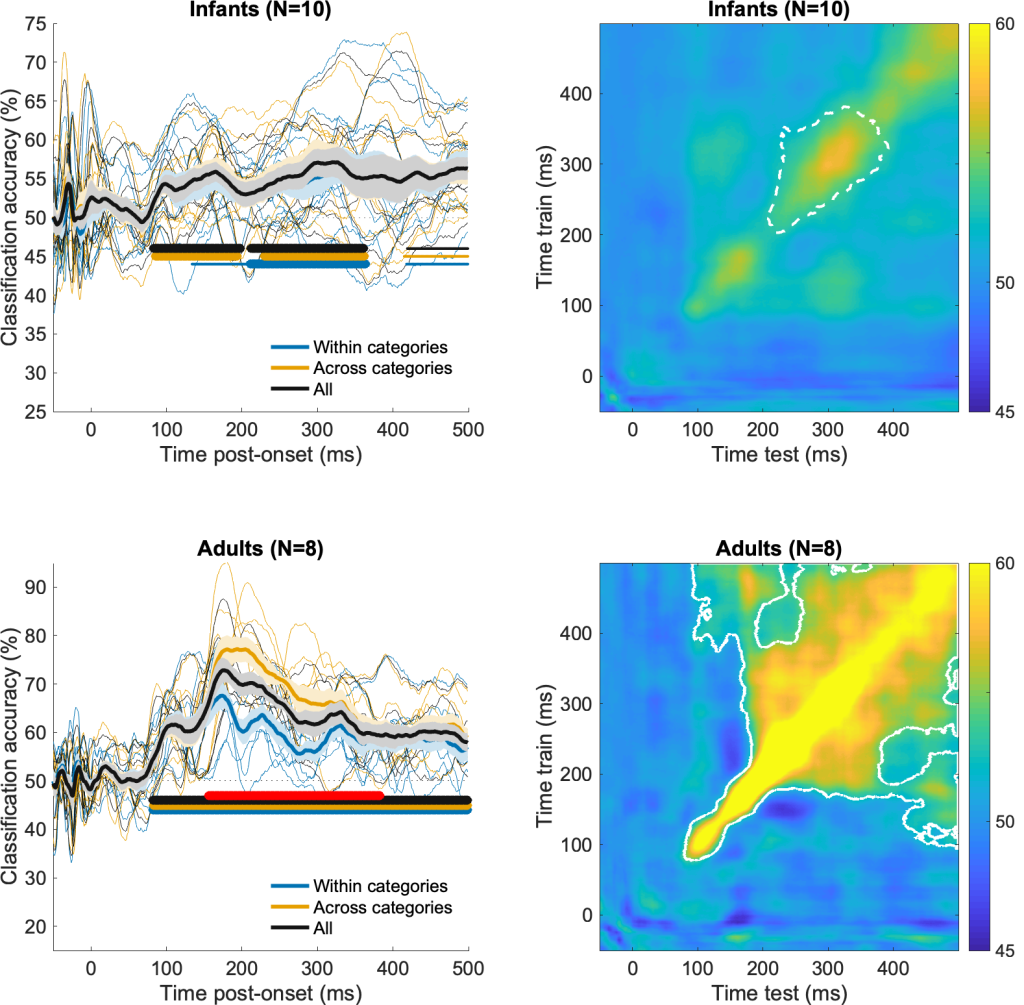

Vision is an essential domain of human intelligence. We recognize visual objects (e.g., a cat or a cup) quickly, often with little or no apparent effort. The current best models of human vision (deep neural networks) are trained in ways that differ from how humans actually learn from infancy onward. With support from the National Science Foundation (BCS 2122961), we ask: How do infants represent the visual world around them, and how is this process shaped by the social-communicative (e.g., linguistic) context? Answering this question will give us fundamental insights into the perceptual and cognitive strengths and constraints that shape how infants understand and interact with the world. These insights will help inform models of how human vision is achieved through evolution, development, and learning.

- Ashton K., Zinszer B. D., Cichy R. M., Nelson C. A., Aslin R. N., & Bayet L. (2022) Time-resolved multivariate pattern analysis of infant EEG data: A practical tutorial. Developmental Cognitive Neuroscience, 101094. doi: 10.1016/j.dcn.2022.101094

- Bayet L., Zinszer B., Reilly E., Cataldo J. K., Pruitt Z., Cichy R. M., Nelson C. A., & Aslin R. N. (2020) Temporal dynamics of visual representations in the infant brain. Developmental Cognitive Neuroscience 45, 100860. doi: 10.1016/j.dcn.2020.100860

What are the mechanisms for representing facial movements from visual input early in life?

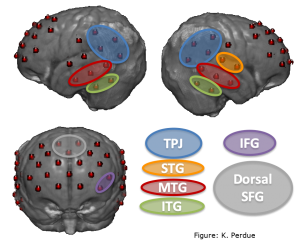

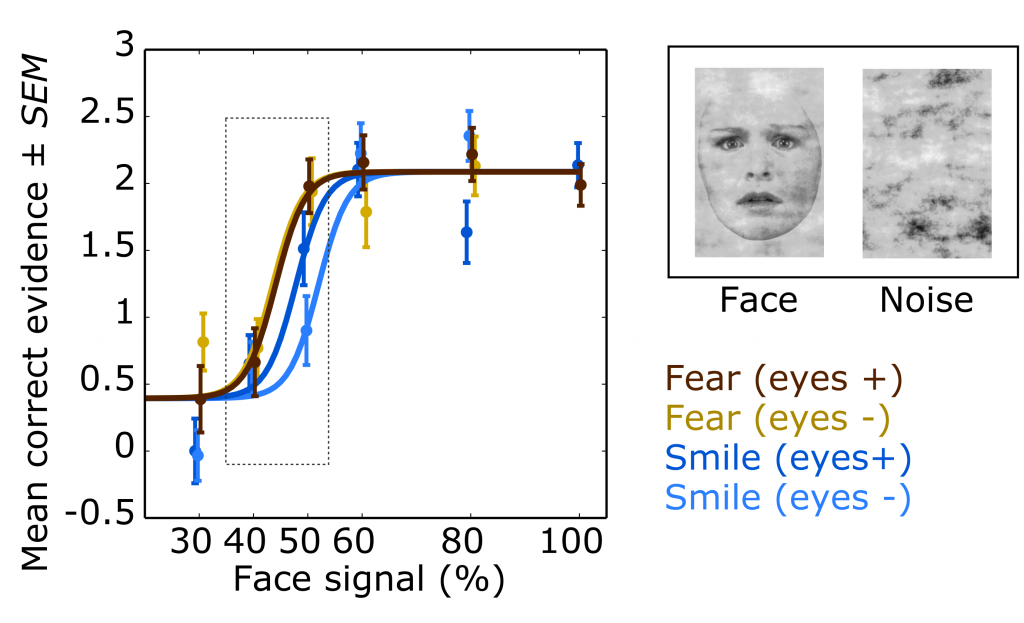

While we know that people use facial movements to infer affect, how those facial movements are extracted from visual input and represented in neural activity remains unclear. This is important because models of “facial emotion” understanding tend to presuppose facial movement representations as their input. That is, current computational models of “facial emotion” understanding do not take into account the visual mechanisms – and associated uncertainty, computational trade-offs, and developmental trajectory – by which patterns of facial movements, also called “action units”, are extracted from visual input. However, learning to represent facial movements – especially across different models – poses a complex challenge to the developing brain, engaging a set of perceptual skills known as high-level vision. How do infants and young children represent facial movements? How may these representations facilitate or constrain infants’ and young children’s ability to learn from other people’s facial expressions?

- Bayet L.*, Perdue K.*, Behrendt H. F., Richards J. E., Westerlund A., Cataldo J. K., & Nelson C. A. (2021) Neural responses to happy, fearful, and angry faces of varying identities in 5- and 7-month-old infants. Developmental Cognitive Neuroscience. 47, 100882. doi: 10.1016/j.dcn.2020.100882

- Bayet L., Behrendt H., Cataldo J. K., Westerlund A., & Nelson C. A. (2018) Recognition of facial emotions of varying intensities by three-year-olds. Developmental Psychology 54, 2240-2247. doi: 10.1037/dev0000588

- Bayet L., Pascalis O., Quinn P.C., Lee K., Gentaz E., & Tanaka J. (2015) Angry facial expressions bias gender categorization in children and adults: behavioral and computational evidence. Frontiers in Psychology 6, 346. doi: 10.3389/fpsyg.2015.00346

* Equal contributions

What are the content & implications of infants’ understanding of affective expressions?

Infants detect facial movements as early as birth, and perceptually categorize some facial expressions of affect as early as 5 to 6 months of age. However, little is known about what infants understand about these expressions. If infants understand facial expressions as affective, then models of affective cognition should account for their responses, and individual differences should reflect liability for atypical affective development. Studies in older children and adults have shown that sensitivity to threat-related facial expressions is altered in disorders such as anxiety, suggesting that affective communication provides a window into broader aspects of affective functioning. What emotional or affective meaning do infants and young children derive from affective expressions? What are the origins of that knowledge, and what are its developmental and clinical implications?

- Bayet L., & Nelson C. A. (2019) The perception of facial emotion in typical and atypical development. In: LoBue V., Perez-Edgar K., & Buss K. (Eds.), Handbook of Emotional Development. pp 105-138. doi: 10.1007/978-3-030-17332-6_6

- Behrendt H. F., Wade M., Bayet L., Nelson C. A., & Bosquet Enlow M. (2019) Pathways to social-emotional functioning in the preschool period: The role of child temperament and maternal anxiety in boys and girls. Development and Psychopathology 26, 1-14. doi: 10.1017/S0954579419000853

- Bayet L., Quinn P.C., Laboissière R., Caldara R., Lee K., & Pascalis O. (2017) Fearful but not happy expressions boost face detection in human infants. Proceedings of the Royal Society B: Biological Sciences 284, 20171054. doi: 10.1098/rspb.2017.1054

Integrating computational tools for developmental cognitive (neuro)science

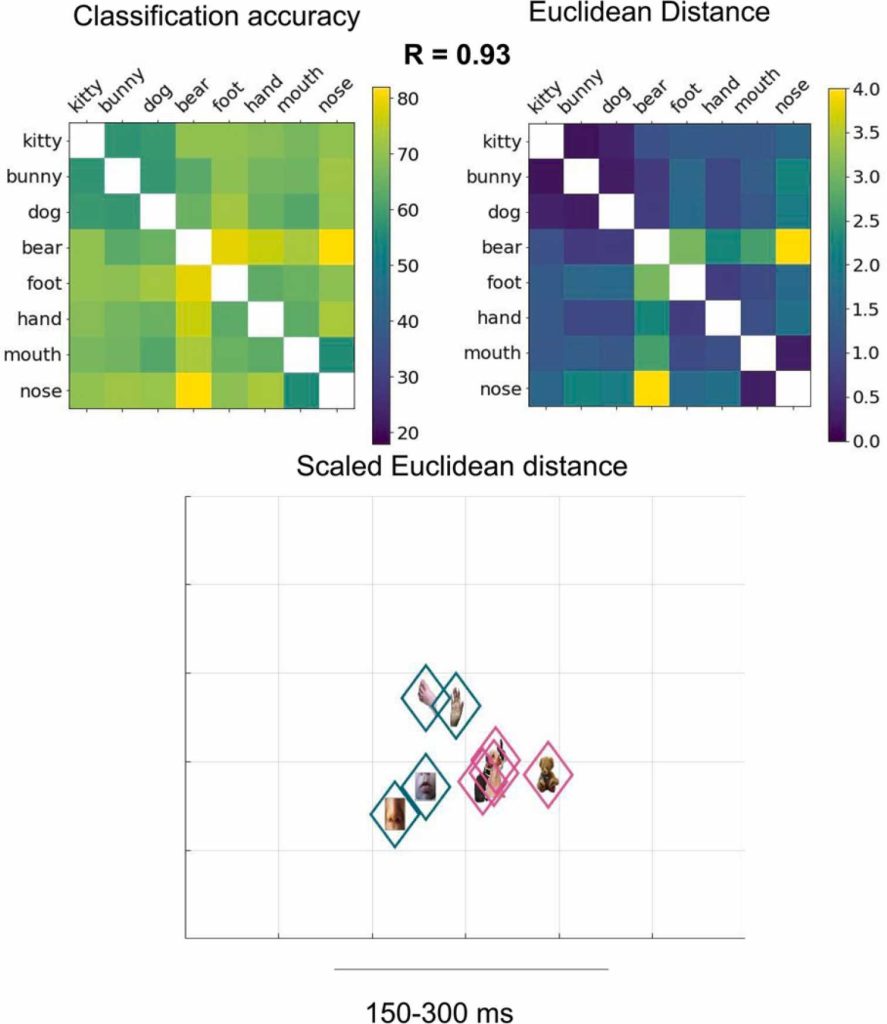

Methodological limitations constrain the scientific questions that we can ask; conversely, methodological innovations open new avenues of inquiry. As part of a consortium of developmental scientists, we seek to integrate modern computational tools for the analysis and modeling of developmental data, such as multivariate pattern analysis (MVPA) of functional near-infrared spectroscopy (fNIRS) and electroencephalography (EEG) data, to ask new questions about how the developing brain represents complex stimuli.

- Ashton K., Zinszer B. D., Cichy R. M., Nelson C. A., Aslin R. N., & Bayet L. (2022) Time-resolved multivariate pattern analysis of infant EEG data: A practical tutorial. Developmental Cognitive Neuroscience, 101094. doi: 10.1016/j.dcn.2022.101094

- Bayet L., Zinszer B., Reilly E., Cataldo J. K., Pruitt Z., Cichy R. M., Nelson C. A., & Aslin R. N. (2020) Temporal dynamics of visual representations in the infant brain. Developmental Cognitive Neuroscience 45, 100860. doi: 10.1016/j.dcn.2020.100860

- Bayet L., Zinszer B. D., Pruitt Z., Aslin R. N., & Wu R. (2018) Dynamics of neural representations when searching for exemplars and categories of human and non-human faces. Scientific Reports 8, 13277. doi: 10.1038/s41598-018-31526-y

- Zinszer B. D., Bayet L., Emberson L. L., Raizada R. D. S., & Aslin R. N. (2017) Decoding semantic representations from fNIRS signals. Neurophotonics 5, 011003. doi: 10.1117/1.NPh.5.1.011003